Language models have evolved at an extraordinary pace. Major tech players such as OpenAI, Meta, and Google now release colossal models with hundreds of billions of parameters, a scale that promises accuracy and comprehension once unimaginable. Parameters are the adjustable knobs that determine the connections among pieces of data, and they are tuned during training. The more parameters a model has, the better it generally becomes at discerning complex patterns in data. These advances come with significant drawbacks, however: immense computational demands and escalating energy costs.

Take Google’s Gemini 1.0 Ultra, which reportedly cost the company $191 million to train. On top of that expenditure come the energy costs of operation: by some estimates, a single interaction with a large language model consumes roughly ten times the energy of a routine Google search. These environmental and financial pressures have spurred a rethink in the AI community about the sustainability and accessibility of such models.

A Shift Towards Small Language Models

In light of the challenges posed by large language models (LLMs), attention has shifted toward small language models (SLMs), which use a fraction of the parameters, often no more than about ten billion. These models are not designed for broad, general-purpose use, but they shine in narrowly defined tasks such as health care chatbots, data gathering on IoT devices, and conversation summaries. Zico Kolter of Carnegie Mellon University makes the point directly, noting that an SLM with only 8 billion parameters can perform quite well in specific contexts. Because such models can run on consumer-grade devices like laptops and smartphones, they no longer depend on colossal data centers.
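A rough calculation shows why a model of that size can fit on consumer hardware. The sketch below estimates the memory needed for the weights alone; the function name and the bits-per-parameter values are illustrative assumptions, not figures from Kolter or any vendor, and real deployments also need memory for activations and runtime overhead.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    # An 8-billion-parameter model at several assumed storage precisions.
    for bits in (32, 16, 8, 4):
        gb = weight_memory_gb(8_000_000_000, bits)
        print(f"{bits:>2} bits per parameter: about {gb:.0f} GB")
```

At 16 bits per parameter the weights take about 16 GB, and at 4 bits about 4 GB, which is within reach of many laptops and some phones.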

Innovative Strategies Enhancing Small Models

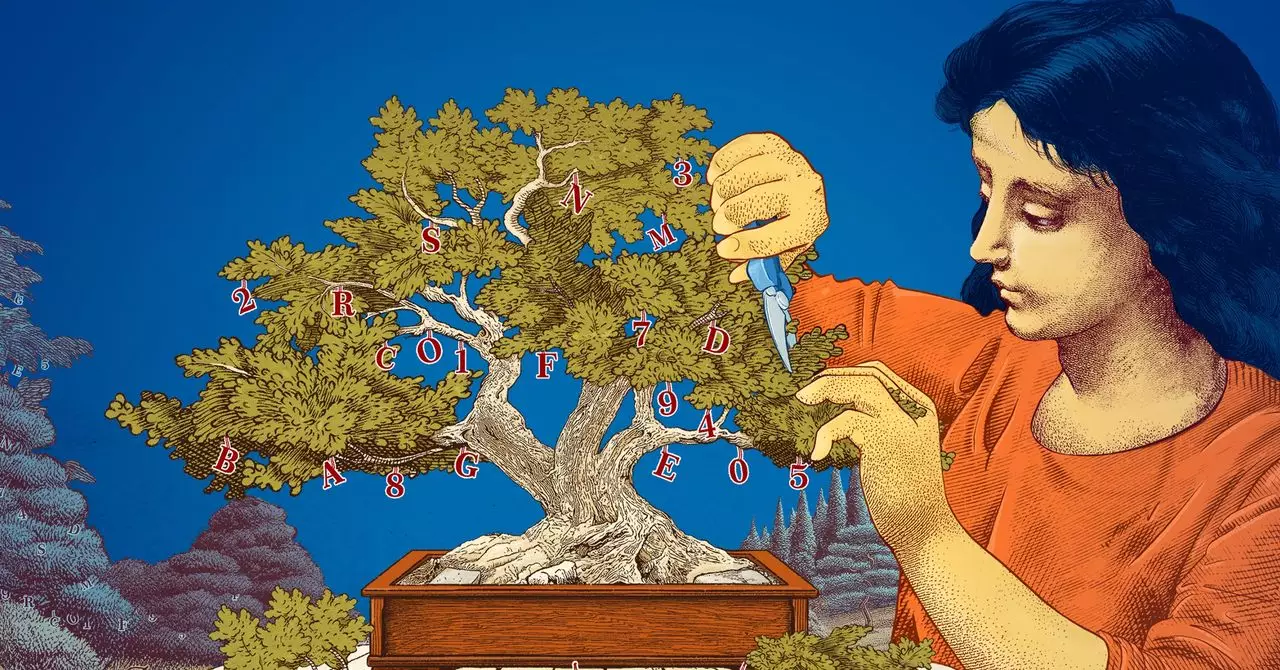

The development of SLMs is powered by strategies that optimize how they are trained. One is knowledge distillation. Large models are typically trained on extensive data sets scraped from the internet, which can be messy and disorganized, but once trained they can generate a refined, high-quality data set for their smaller counterparts. It is akin to an experienced teacher passing lessons on to a diligent student: the crucial insights are retained while the messy data are filtered out. Kolter suggests that this use of high-quality data is why SLMs perform so well despite their small size and limited training data.
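The teacher-student idea can be made concrete with a small sketch. Note that this illustrates one common formulation of distillation, in which the student is trained to match the teacher's temperature-softened output probabilities; it is not necessarily the data-generation pipeline described above. The function names and the temperature value are assumptions chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.0]
    print(distillation_loss(teacher, [3.5, 1.2, 0.1]))  # close to the teacher
    print(distillation_loss(teacher, [0.0, 1.0, 4.0]))  # far from the teacher
```

During training, the student's parameters would be adjusted to push this loss toward zero, so that its predictions track the teacher's.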

Researchers have also drawn inspiration from the human brain, which gains efficiency by trimming unneeded connections. The corresponding method, known as pruning, removes unnecessary or inefficient parameters from an established large model, streamlining it for specific tasks without sacrificing performance. The idea goes back to “optimal brain damage,” a method proposed by artificial intelligence pioneer Yann LeCun, which holds that a significant percentage of the parameters in a trained neural network can be eliminated. Pruning can sharpen a model’s focus and increase its operational efficiency, paving the way for tailored applications.
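As a minimal sketch of the idea, the example below zeroes out the smallest-magnitude weights in a list. This is magnitude pruning, a simpler stand-in used here for illustration; LeCun's optimal brain damage instead ranks parameters by a second-derivative estimate of how much removing each one would hurt the model. The function name and the sample weights are hypothetical.

```python
def magnitude_prune(weights, fraction):
    """Return a copy of weights with the smallest-magnitude fraction set to 0.0."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    n_prune = int(len(weights) * fraction)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    layer = [0.1, -2.0, 0.05, 3.0, -0.2, 1.5, 0.01, -0.9]
    print(magnitude_prune(layer, 0.5))
```

In practice a pruned model is usually fine-tuned afterward so the remaining weights can compensate for the ones that were removed.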

The Merits of Smaller Models: Approachability and Experimentation

From a research perspective, SLMs provide a valuable sandbox for testing new ideas with lower financial and technical stakes. Researchers can try out methods and approaches without the high costs that come with large-scale models. Leshem Choshen of the MIT-IBM Watson AI Lab argues that smaller models let science advance through iterative testing, and that their simplicity encourages ingenuity and exploration.

Moreover, with fewer parameters, SLMs may offer an advantage in transparency. Researchers can examine them more thoroughly, which can help reveal how the models arrive at their outputs. As society grows more cautious about the ethical implications of AI systems, transparency becomes a critical objective. Small models might therefore not only ease cost concerns but also support more ethical AI development.

The Continuum of Model Applications: Where Small Meets Large

Large, complex models will remain necessary in demanding domains such as general-purpose chatbots, image generation, and drug discovery, but small models offer a compelling alternative for many other uses. They operate effectively in niche environments, cost less to compute, and are easier to train, which positions them as valuable tools in the ongoing AI revolution.

As researchers work to find common ground between the expansive capabilities of LLMs and the focused precision of SLMs, it becomes clear that the future of artificial intelligence will be defined not by size alone but by intent and efficiency. These advances herald a new era of model design and signal a conceptual shift in how we understand and apply artificial intelligence in daily life.
