The digital landscape is constantly evolving, and privacy has become one of users’ most pressing concerns. Meta has recently rolled out a feature across its messaging platforms (Facebook, Instagram, Messenger, and WhatsApp) that brings its AI tools directly into conversations. The added convenience comes with significant privacy implications, however, raising important questions about how user data is managed and secured.

Meta’s latest messaging feature lets users engage with an AI assistant directly within their chats. Users can ask questions and get real-time help by summoning Meta AI, typically by mentioning @MetaAI in the conversation. At first glance, the feature promises convenience and smoother interaction, but it also complicates what happens to the information shared in those conversations.

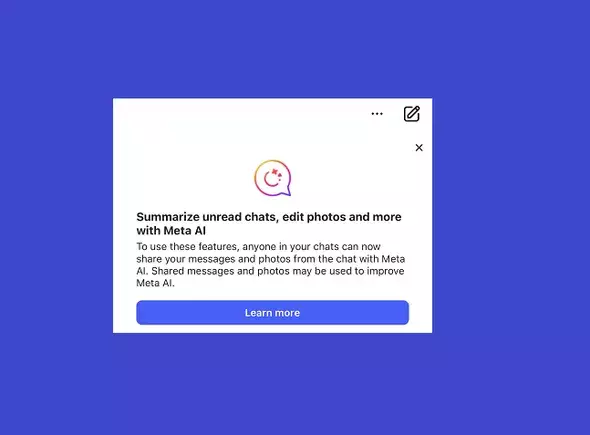

Meta presents the feature as a step toward more interactive and responsive communication. It comes with a critical caveat, however: information shared in these chats may be processed and used to train Meta’s AI systems. Meta underscores this with in-app pop-up notices reminding users not to share sensitive data, such as financial information or personal identifiers.

Privacy Concerns and User Awareness

AI can clearly make messaging more useful, but the associated privacy risks cannot be overlooked. Meta has taken steps to inform users, yet how effective such warnings are remains open to debate. The underlying problem is that privacy agreements are long and complex, and users often skim them. Many people may not realize they already consented to this kind of data use when they signed up for these platforms, because the legalese in those agreements tends to obscure how data is actually shared, leaving users vulnerable to unintentional exposure.

Sharing private messages with an AI system also carries serious implications. Even if the AI offers a valuable service, the possibility that sensitive information could be extracted and used outside its original conversational context is troubling. Users now face a difficult choice: adopt the new technology for its benefits and accept some loss of privacy, or avoid it to keep their conversations private.

Meta’s current framework does not let users fully opt out of having their information used by its AI systems. Instead, the company suggests workarounds, such as not engaging with the AI in chats or deleting sensitive conversations entirely. These steps may sound simple, but they highlight the tension between user convenience and data privacy, a tension that is increasingly difficult to reconcile.

For users who want to protect their privacy while still using AI features, the recommendation to keep a separate chat just for Meta AI interactions seems practical. In principle, this limits the chance that sensitive information from other conversations gets processed, but it also exposes a disconnect in the app’s design: if the AI is valuable enough to build into conversations, forcing users to switch to a separate chat undercuts that convenience. As a result, users may find that even casual messaging now requires them to weigh what data they are sharing.

Meta’s introduction of AI into its messaging apps reflects a broader trend towards integrating advanced technologies into daily communications. However, this progress does not come without repercussions. Users must navigate the delicate balance between leveraging technological advancements and safeguarding their privacy.

As companies like Meta continue to expand their AI capabilities, the onus is on users to remain informed and proactive. Understanding the ramifications of data sharing and being vigilant about what is shared in conversations becomes increasingly important. In a world where the lines between convenience and privacy are beginning to blur, users must ensure that their digital footprints align with their personal comfort levels. Ultimately, the future will require a more transparent approach from tech companies, ensuring that privacy is not sacrificed at the altar of innovation.
