In digital security, tech giants like Meta must balance vigilant protection against users' demand for privacy. Meta has recently stepped back into the contentious field of facial recognition with new strategies aimed at tackling online scams and improving account security. The intention behind these measures is commendable, but their potential repercussions warrant closer examination.

One notable initiative targets the insidious trend of "celeb-bait" scams. These schemes exploit images of public figures to lure unsuspecting users into engaging with deceptive advertisements that lead to malicious websites. To counter them, Meta is using a facial matching process that compares the faces in ads with the official profiles of well-known personalities. If there is a match, Meta says it will check whether the ad is an authorized promotion and block it if it turns out to be a scam.

This proactive approach shows that Meta understands how digital marketing can be manipulated, but it also raises immediate red flags about user privacy. The company stresses that any facial data generated during the process will be deleted immediately after the one-time comparison, in an effort to ease concerns about misuse. However, given Meta's troubled history with facial recognition, from privacy breaches to mass data collection, many users remain skeptical of these assurances.

Meta previously shut down its facial recognition features after widespread criticism and is now cautiously re-engaging with the technology. Facial recognition tools are widely misused, from surveillance in public spaces to oppressive regimes targeting marginalized communities. In that climate, Meta's decision to reintroduce any form of the technology appears fraught with peril. As privacy advocates have rightly pointed out, matching and monitoring faces opens a dangerous avenue for abuse, especially if data leaks or unauthorized access occurs.

Harmful uses of facial recognition have been documented worldwide. These include deployment by state authorities for social monitoring and for punitive measures against minority communities. As Meta launches its new initiatives, the shadow of these chilling applications looms large and makes a serious public conversation about the ethical limits of facial recognition necessary.

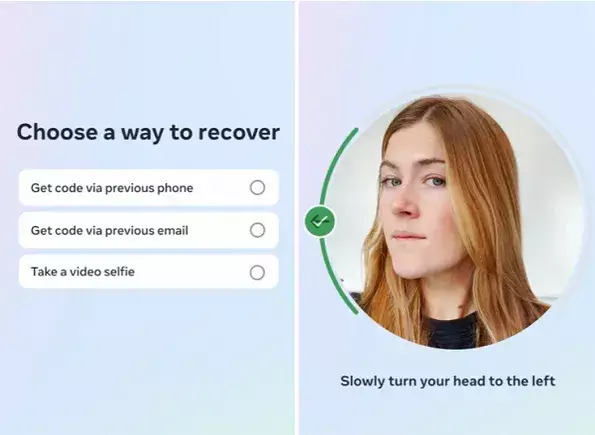

Alongside its work on celeb-bait scams, Meta is trialing video selfies for account recovery. Users submit a video selfie, which is compared with their existing profile images to verify their identity. The system resembles face-based verification already common in mobile device security, but its implications for user privacy and data security cannot be overlooked.

Meta pledges that the video selfies will be encrypted, stored securely, and never visible on user profiles. As with its celebrity image checks, the company says any facial data collected will be deleted once the comparison has been made. This pledge raises a fundamental question: can users genuinely trust Meta to follow through on its promises of data deletion and security? Given the company's track record, lingering doubts about how it handles privacy could make many users hesitant to adopt such features.

As Meta moves ahead with these experiments, privacy concerns will remain at the forefront of public scrutiny. The balance between stronger security and the preservation of personal privacy is a delicate one. Facial recognition can make fraud detection and identity verification more efficient, but the history of abuse involving such data poses a significant hurdle to winning user trust.

Regulators in Western nations are still working out how to govern facial recognition, and Meta's return to these tools will only intensify existing scrutiny. Backlash from privacy advocates, together with users' concerns about how their personal data is handled, could slow adoption. Simply put, the tech world is not only watching usage figures or algorithmic improvements; it is closely examining the ethical considerations at play.

Meta's use of facial recognition to improve security has merit, but it comes wrapped in complexity and apprehension. The unease surrounding privacy, data security, and the morality of using such technologies cannot be overstated. As Meta navigates this landscape, it must make its security measures effective in a way that also earns and keeps user trust. The path forward is full of challenges, but if Meta navigates these ethical waters carefully, a new standard for responsible technology use may emerge.
