As automated systems take on more quality-judging work, the way they interact with human perception creates both opportunities and challenges. This is especially true in industries that depend on quality assessment, such as agriculture and food production. A recent study by researchers at the Arkansas Agricultural Experiment Station points toward a machine-learning application that could one day help consumers pick the best produce while also improving food quality evaluations. This article examines the study's findings and what they may mean for food technology.

Human senses play a crucial role in judging food quality. Shoppers typically rely on visual cues to gauge the freshness of fruits and vegetables. However, human perception is sensitive to environmental factors such as lighting, so the same produce can be judged differently under different conditions. This study, led by Dongyi Wang and published in the Journal of Food Engineering, highlights the gap between human assessments and machine predictions under varying light conditions. By understanding how lighting affects human perception, the researchers aim to build machine-learning models that are both accurate and adaptable to these variables.

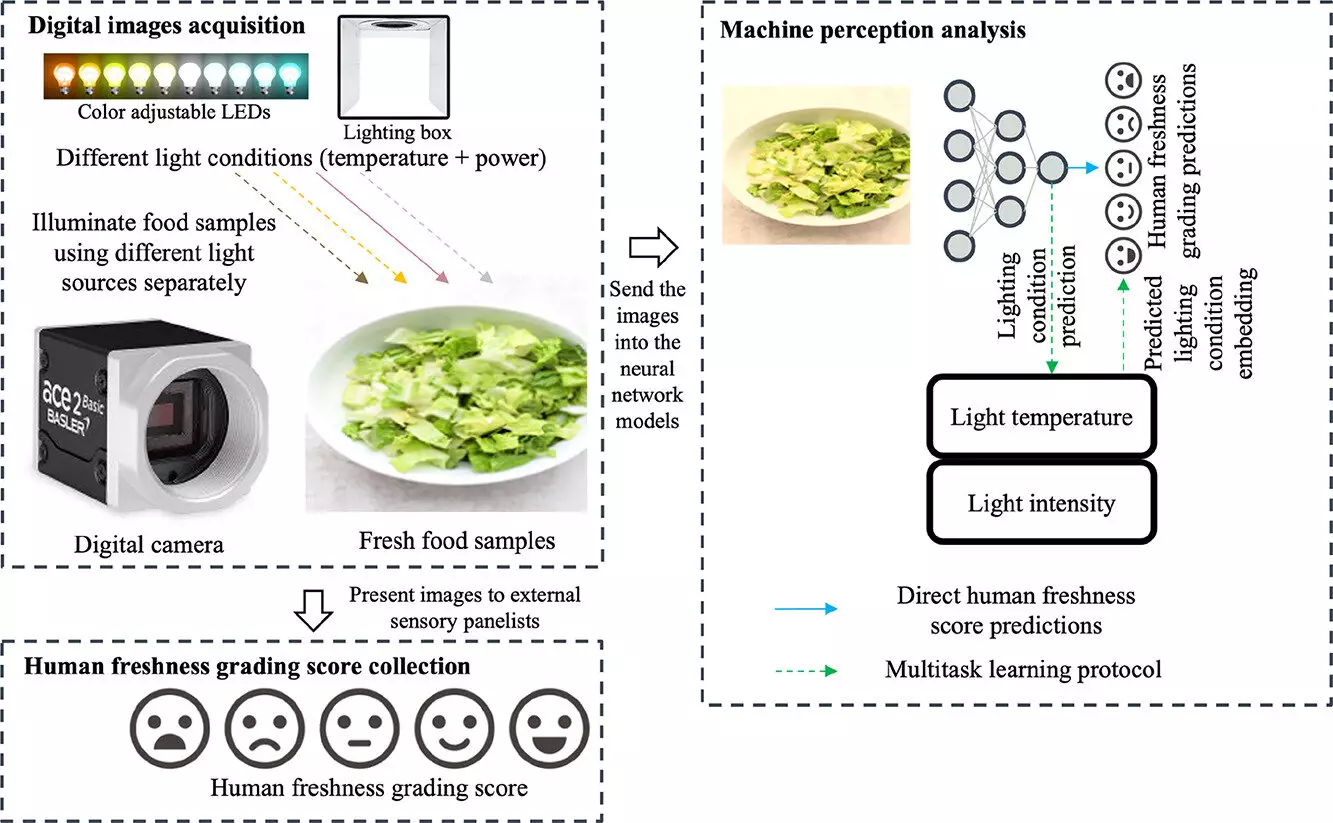

The researchers focused on lettuce for their study. They recruited 109 participants, carefully selected to ensure none had visual impairments or color blindness. Over several days, these participants evaluated hundreds of images of Romaine lettuce, each captured under different lighting conditions. The scientists manipulated factors such as brightness and color temperature, creating a dataset comprising 675 images that reflected varying degrees of freshness and browning.
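The article does not detail how the lighting variations were produced. As a rough illustration of how brightness and color temperature shift an image's colors, the Python sketch below applies a brightness multiplier and a simple warm/cool tint to RGB pixels. The names `adjust_pixel` and `lighting_variants` and the linear red/blue channel gains are hypothetical simplifications, not the researchers' method; a physically accurate color-temperature change is more involved than scaling two channels.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]


def adjust_pixel(rgb: Pixel, brightness: float, warmth: float) -> Pixel:
    """Approximate a lighting change on one 8-bit RGB pixel.

    brightness: overall multiplier (1.0 leaves the pixel unchanged).
    warmth: -1.0 (cooler, bluer) to +1.0 (warmer, redder). The 10% red/blue
    gains are a hypothetical stand-in for a real color-temperature model.
    """
    r, g, b = rgb
    red_gain = 1.0 + 0.1 * warmth
    blue_gain = 1.0 - 0.1 * warmth
    channels = (r * red_gain, g, b * blue_gain)
    # Apply brightness, round to integers, and clamp to the 0-255 range.
    return tuple(max(0, min(255, round(c * brightness))) for c in channels)


def lighting_variants(
    image: List[Pixel],
    brightness_levels: Sequence[float],
    warmth_levels: Sequence[float],
) -> List[Tuple[float, float, List[Pixel]]]:
    """Return one adjusted copy of the image per (brightness, warmth) pair."""
    variants = []
    for brightness in brightness_levels:
        for warmth in warmth_levels:
            adjusted = [adjust_pixel(p, brightness, warmth) for p in image]
            variants.append((brightness, warmth, adjusted))
    return variants


# Toy "image" of two lettuce-like pixels (values invented for illustration).
lettuce = [(120, 170, 90), (150, 130, 70)]
grid = lighting_variants(lettuce, [0.7, 1.0, 1.3], [-1.0, 0.0, 1.0])
print(len(grid), "lighting variants")
```

Three brightness levels crossed with three warmth levels yield nine versions of each image. A grid like this is one simple way to expose the same produce to systematically different lighting, though real studies may instead photograph samples under physical light sources.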

Using these assessments, the team trained machine-learning models to predict how humans would grade each image. By aligning the models' predictions more closely with the human sensory data, the researchers reduced prediction errors by approximately 20%. This result suggests a possible shift in how food quality assessments may be conducted in the future.
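The article does not say which error metric the 20% figure refers to. Assuming a metric such as mean absolute error (MAE) between predicted and human-assigned freshness scores, a relative reduction could be computed as in the minimal Python sketch below. The scores are invented toy numbers chosen to keep the arithmetic easy to follow, not data from the study, and the two "models" are just hypothetical lists of predictions.

```python
from statistics import mean
from typing import Sequence


def mean_absolute_error(predicted: Sequence[float], observed: Sequence[float]) -> float:
    """Average absolute gap between model predictions and human scores."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    return mean(abs(p - o) for p, o in zip(predicted, observed))


def relative_reduction(baseline_error: float, new_error: float) -> float:
    """Fraction by which new_error improves on baseline_error (0.2 means 20%)."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return (baseline_error - new_error) / baseline_error


# Hypothetical human freshness grades and two sets of toy model predictions.
human_scores = [4.0, 3.0, 2.0, 1.0]
baseline_predictions = [3.5, 3.5, 2.5, 1.5]  # lighting-blind model (toy)
aware_predictions = [3.6, 3.4, 2.4, 1.4]     # perception-aware model (toy)

baseline_mae = mean_absolute_error(baseline_predictions, human_scores)
aware_mae = mean_absolute_error(aware_predictions, human_scores)
reduction = relative_reduction(baseline_mae, aware_mae)
print(f"relative error reduction: {reduction:.0%}")
```

With these toy numbers the MAE drops from 0.5 to 0.4, and the script prints `relative error reduction: 20%`. A relative figure like this says nothing about the absolute size of the errors, which is why the baseline matters when interpreting such claims.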

Although machine learning has been applied successfully in food engineering, many existing systems have been criticized for relying on “human-labeled ground truths” or simple color metrics. Such systems generally do not account for varying lighting conditions, which can strongly influence how people perceive color and quality. The study underscores that models trained without considering these biases are less reliable.

As the study shows, a correction is needed: future algorithms should account for the variability that environmental factors introduce into human perception. This understanding can lead to more robust machine-learning models that perform well in real-world settings, where lighting conditions are often far from ideal.

The implications of Wang’s research extend beyond agriculture. The methods developed to refine machine vision systems could apply in other fields, from jewelry assessment to quality control in manufacturing. As the technology matures, it may lead to consumer-oriented applications, such as mobile apps that help shoppers judge the quality of produce by taking a picture with a smartphone.

Grocery stores could also use these insights to arrange their displays in ways that enhance the appeal and perceived quality of their products. Better lighting configurations might noticeably change how consumers perceive produce at the point of sale, potentially increasing sales of fresher products.

The intersection of human perception and machine learning is fertile ground for innovation in food quality assessment. As researchers develop algorithms that account for variability in human sensory evaluations, food quality predictions should become more reliable and consistent. Balancing human intuition with machine precision could change how we perceive and interact with the food we eat. Looking ahead, studies like Wang’s show how much potential lies at the intersection of technology and human experience.
