Large language models (LLMs) can generate fluent, human-like text, but their accuracy often falters on complex or domain-specific questions. A person facing a tricky question might turn to a colleague with specialized knowledge. LLMs, by contrast, generally do not recognize when to “phone a friend,” which in their case means handing part of an answer to another model. That gap has motivated new approaches to improving the accuracy of AI-generated responses.

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed an algorithm called “Co-LLM” that pairs a general-purpose LLM with an expert model specialized in a particular domain. Rather than relying on rigid, hand-written rules or large labeled datasets to dictate when the two models should work together, Co-LLM lets the pattern of collaboration emerge more organically.

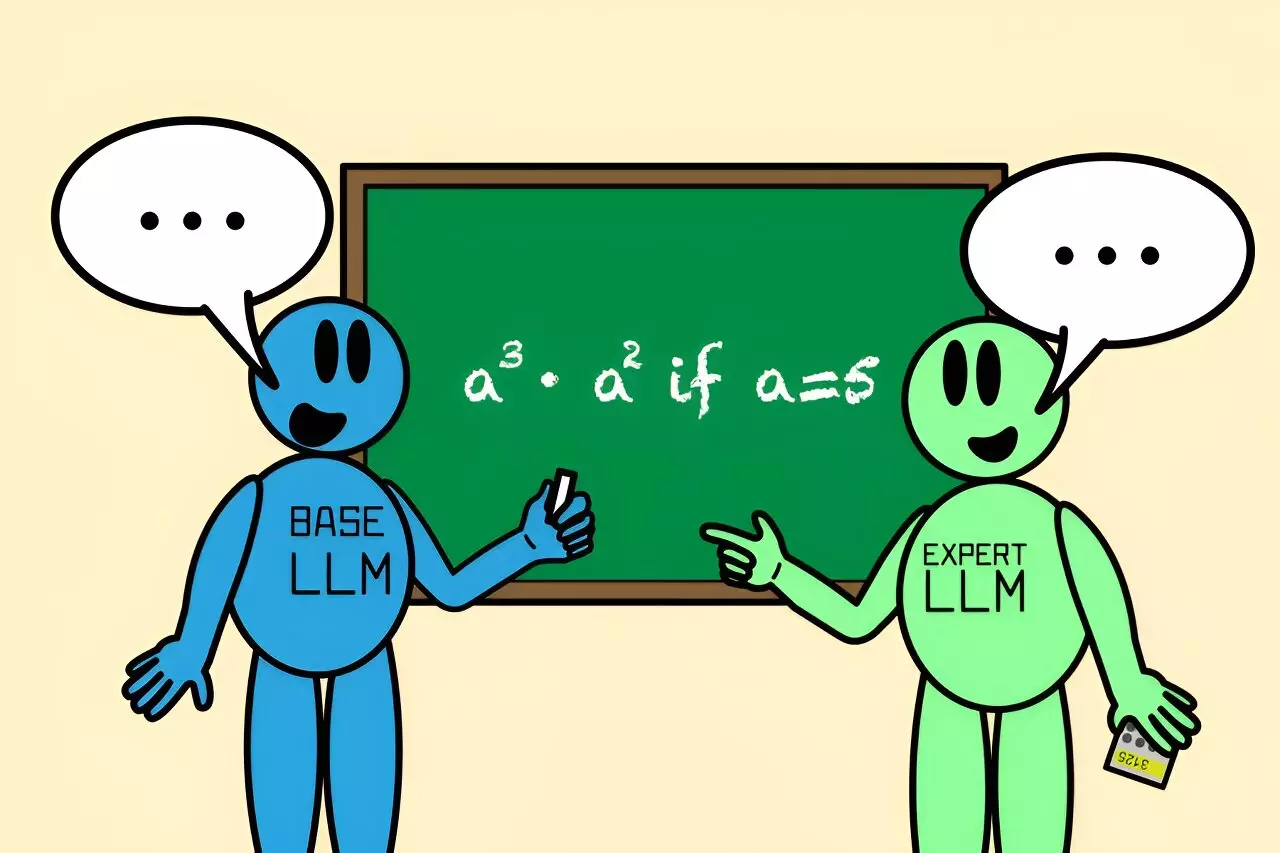

Co-LLM lets the base LLM begin an answer while checking, as it goes, for parts that would benefit from a specialized model. It does this with a “switch variable,” which acts like a project manager: it judges how much each part of the response depends on specialized knowledge and decides where the expert model should step in. The result is a more accurate response and a more efficient process, because the expert model is used only when necessary.

To see how Co-LLM operates, consider a query such as “What are some examples of extinct bear species?” The general-purpose LLM starts drafting the answer. As it does, the switch variable examines each token (a word or word fragment) being produced. Where the general model lacks specifics, such as when a species went extinct, the switch calls on the expert model to supply those details. The combined answer is more complete and more factually accurate than the general model’s answer alone.
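The token-by-token handoff described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers’ implementation: the names `collaborative_decode`, `scripted`, and `toy_switch`, the 0.5 threshold, and the placeholder tokens `<unsure>` and `<expert-date>` are all hypothetical, and the “models” are scripted stand-ins rather than real LLMs. In Co-LLM itself the switch is learned, not hand-written.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-ins: a "model" maps the tokens so far to its next token,
# and the "switch" scores how much the next token needs the expert (0.0 to 1.0).
NextToken = Callable[[List[str]], str]
Switch = Callable[[List[str]], float]


def collaborative_decode(
    prompt: List[str],
    base: NextToken,
    expert: NextToken,
    switch: Switch,
    threshold: float = 0.5,  # assumed cutoff, chosen only for this sketch
    max_tokens: int = 20,
    eos: str = "<eos>",
) -> List[Tuple[str, str]]:
    """Generate token by token, deferring to the expert when the switch fires.

    Returns a list of (source, token) pairs so the handoffs are visible.
    """
    tokens = list(prompt)
    trace: List[Tuple[str, str]] = []
    for _ in range(max_tokens):
        use_expert = switch(tokens) > threshold
        token = (expert if use_expert else base)(tokens)
        if token == eos:
            break
        tokens.append(token)
        trace.append(("expert" if use_expert else "base", token))
    return trace


# Toy setup: both "models" replay a fixed script. The base model is unsure of
# one token, and the expert has a (placeholder) value for that position.
PROMPT = ["Q:", "extinct", "bear", "species?"]
BASE_SCRIPT = ["The", "cave", "bear", "died", "out", "around",
               "<unsure>", "years", "ago", "<eos>"]
EXPERT_SCRIPT = BASE_SCRIPT[:6] + ["<expert-date>"] + BASE_SCRIPT[7:]


def scripted(script: List[str]) -> NextToken:
    """Build a fake model that emits script[i] as the i-th generated token."""
    def next_token(tokens: List[str]) -> str:
        return script[len(tokens) - len(PROMPT)]
    return next_token


def toy_switch(tokens: List[str]) -> float:
    """Hand-written switch: fire only where the base model would be unsure."""
    position = len(tokens) - len(PROMPT)
    return 0.9 if BASE_SCRIPT[position] == "<unsure>" else 0.1


trace = collaborative_decode(
    PROMPT, scripted(BASE_SCRIPT), scripted(EXPERT_SCRIPT), toy_switch
)
for source, token in trace:
    print(f"{source:>6}: {token}")
```

In this toy run the base model writes every token except the one it is unsure about, which is the only position attributed to the expert. That mirrors the efficiency argument: the expert is consulted for a single token rather than for the whole answer.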

This approach contrasts sharply with conventional fine-tuning. Instead of repeatedly modifying a model to fit the intricacies of each dataset, Co-LLM has two models cooperate, much as people seek out an expert when they need one.

Potential Applications Across Various Domains

One of the most promising aspects of Co-LLM is its adaptability across fields. In medicine, for instance, a general-purpose LLM might struggle to name the ingredients of a specific prescription drug. By calling on an expert model trained on medical literature, Co-LLM can produce a more accurate and reliable answer. The researchers have paired the base model with expert models such as Meditron to answer complex biomedical queries, which points to practical real-world uses for the technique.

Math questions show the benefit of collaboration especially clearly. Asked to compute “a^3 · a^2 if a=5,” a general LLM may wrongly answer 125. When Co-LLM pairs that model with a specialized math model such as Llemma, the two together reach the correct result of 3,125.
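For reference, the correct value follows from the rule for multiplying powers with the same base:

```latex
a^3 \cdot a^2 = a^{3+2} = a^5,
\qquad
a = 5 \;\Rightarrow\; 5^5 = 5 \cdot 5 \cdot 5 \cdot 5 \cdot 5 = 3125
```

Note that 125 equals 5^3. One plausible reading, which is an assumption here and not something the source states, is that the general model evaluated only the first factor and dropped the second.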

Future Directions and Enhancements for Co-LLM

Co-LLM is a significant advance in LLM collaboration, and ongoing research aims to make it more effective. One proposed improvement is a human-like self-correction mechanism that lets the system adjust its output based on what the expert model returns. If the expert model gives an incorrect response, Co-LLM could backtrack and revise, which would make its answers more trustworthy.

Keeping the models’ expertise current is also crucial. The researchers hope to update the expert model by continuing to train the base model, so that the information it provides stays up to date. That adaptability could eventually make Co-LLM a useful tool for maintaining enterprise documents or other essential datasets.

Co-LLM marks a noteworthy step toward more collaborative AI systems. By routing at the token level, it calls on the expert model only where needed, which improves computational efficiency as well as factual accuracy. The approach also deliberately imitates the way people collaborate. As the field continues to evolve, the principles behind Co-LLM could lay the groundwork for AI systems that handle specialized knowledge more reliably.
