The evolution of artificial intelligence (AI) has been marked by fierce competition and rapid progress. Among the newer contenders in this fast-moving field, DeepSeek, a startup known for releasing its models openly, has unveiled its latest ultra-large model, DeepSeek-V3. The release marks a milestone for DeepSeek and a significant moment in the ongoing debate between open-source and closed-source AI.

DeepSeek-V3 has 671 billion total parameters organized in a mixture-of-experts (MoE) architecture, but only about 37 billion of them are activated for each token. A learned router sends each token to a small subset of specialized expert sub-networks. The model therefore keeps the capacity of a very large network while paying roughly the compute cost of a much smaller one.
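
To make the routing idea concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not DeepSeek's code: the toy experts, the router weights, `k=2`, and the helper names (`top_k_route`, `moe_forward`, `make_expert`) are all hypothetical, and the softmax-over-selected-experts gate is a common textbook choice. DeepSeek-V3 itself uses many fine-grained experts plus shared experts and computes its gates differently. The point is only that unselected experts never execute.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(scores, k):
    """Pick the k experts with the highest affinity and normalize their gates."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in ranked])
    return list(zip(ranked, gates))

def make_expert(scale, calls, idx):
    """Hypothetical toy expert: scales its input and records that it ran."""
    def expert(x):
        calls.append(idx)
        return [scale * xi for xi in x]
    return expert

def moe_forward(x, experts, router, k):
    # Affinity of the token for each expert: dot product with that expert's router row.
    scores = [sum(r * xi for r, xi in zip(row, x)) for row in router]
    out = [0.0] * len(x)
    # Only the k selected experts run; all others are skipped entirely.
    for idx, gate in top_k_route(scores, k):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out

calls = []
experts = [make_expert(float(i + 1), calls, i) for i in range(8)]
router = [[float(i), 0.0] for i in range(8)]  # hypothetical router: expert i scores i * x[0]
x = [1.0, 2.0]
out = moe_forward(x, experts, router, k=2)
print(sorted(calls))              # the two highest-scoring experts: [6, 7]
print(len(calls) / len(experts))  # fraction of experts executed: 0.25
```

In the toy run only 2 of 8 experts execute; DeepSeek-V3 applies the same principle at scale, which is how roughly 37 of 671 billion parameters end up active per token.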

What’s particularly noteworthy about DeepSeek-V3 is that it outperforms established open-source models such as Meta’s Llama 3.1 405B while competing closely with leading closed-source models from OpenAI and Anthropic. That combination makes it a compelling option for enterprises and developers who want advanced AI capabilities without the licensing and access constraints of proprietary systems.

DeepSeek-V3 is more than an iteration of its predecessor, DeepSeek-V2: it builds on V2’s architecture and adds two major innovations. The first is an auxiliary-loss-free load-balancing strategy for distributing tokens across experts. MoE models usually add an auxiliary loss term to keep experts evenly used, and that extra term can degrade model quality. DeepSeek-V3 instead monitors each expert’s load and adjusts a per-expert bias used during routing, keeping the load balanced without the quality penalty.
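
The bias-adjustment idea can be sketched in a few lines. This is a simplified illustration, not DeepSeek’s implementation: the helper names, the two-expert setup, the toy affinities, and the step size `gamma=0.03` are hypothetical. Each expert carries a bias that is added to its affinity only when choosing experts; after each batch, overloaded experts have their bias lowered and underloaded experts have it raised, with no extra loss term involved.

```python
def select_experts(affinity, bias, k):
    """Choose the top-k experts by affinity + bias. The bias only steers selection;
    gate weights (not modeled here) would still come from the raw affinity."""
    ranked = sorted(range(len(affinity)), key=lambda i: affinity[i] + bias[i], reverse=True)
    return ranked[:k]

def route(tokens, bias, k):
    """Count how many tokens each expert receives in one batch."""
    loads = [0] * len(bias)
    for affinity in tokens:
        for e in select_experts(affinity, bias, k):
            loads[e] += 1
    return loads

def update_bias(bias, loads, gamma):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(loads) / len(loads)
    new_bias = []
    for b, load in zip(bias, loads):
        if load > mean_load:
            new_bias.append(b - gamma)
        elif load < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias

# Four hypothetical tokens that all prefer expert 0, by shrinking margins.
tokens = [[0.9, 0.1], [0.7, 0.3], [0.6, 0.4], [0.55, 0.45]]
bias = [0.0, 0.0]
history = []
for step in range(6):
    loads = route(tokens, bias, k=1)
    history.append(loads)
    bias = update_bias(bias, loads, gamma=0.03)
print(history)  # [[4, 0], [4, 0], [3, 1], [3, 1], [2, 2], [2, 2]]
```

The load starts fully skewed toward expert 0 and drifts to an even split as the biases shift, while the tokens with the strongest preference keep their favored expert.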

The second innovation is multi-token prediction (MTP), a training objective in which the model learns to predict several future tokens at each position rather than only the next one. This densifies the training signal, and the extra prediction module can also be repurposed at inference time for speculative decoding. DeepSeek reports a generation speed of 60 tokens per second, three times that of DeepSeek-V2, which makes the model more practical for real-world applications.
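
A minimal sketch of what the MTP objective adds during training: for every position, the targets extend beyond the next token, and the extra-depth losses are averaged and added to the ordinary next-token loss with a weighting factor. The function names, the depth, the weight, and the probabilities below are hypothetical, and the sketch ignores how DeepSeek-V3’s sequential MTP modules actually produce their predictions; it only shows how the targets and the combined loss are formed.

```python
import math

def mtp_targets(tokens, depth):
    """For each position i, return the next `depth` tokens: tokens[i+1 .. i+depth]."""
    return [tokens[i + 1 : i + 1 + depth] for i in range(len(tokens) - depth)]

def cross_entropy(target_probs):
    """Mean negative log-likelihood, given the probability assigned to each true token."""
    return -sum(math.log(p) for p in target_probs) / len(target_probs)

def combined_loss(main_loss, mtp_losses, weight):
    """Next-token loss plus the weighted average of the extra-depth losses."""
    return main_loss + weight * sum(mtp_losses) / len(mtp_losses)

tokens = [10, 11, 12, 13, 14]
print(mtp_targets(tokens, depth=2))  # [[11, 12], [12, 13], [13, 14]]

main = cross_entropy([0.5, 0.5])       # hypothetical next-token probabilities
extra = [cross_entropy([0.25, 0.25])]  # hypothetical probabilities at the extra depth
print(combined_loss(main, extra, weight=0.3))
```

Each training position thus supervises more than one future token, which is where the denser training signal comes from.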

Training a model this large is challenging, and DeepSeek approached it in stages. It pre-trained DeepSeek-V3 on 14.8 trillion diverse tokens, then performed a two-stage context-length extension, raising the maximum context length first to 32,000 tokens and then to 128,000.

After pre-training, DeepSeek refined the model with supervised fine-tuning (SFT) and reinforcement learning (RL) to align it more closely with human preferences, a key step in making AI more intuitive and useful. Co-optimization of algorithms, frameworks, and hardware kept the whole run economical: DeepSeek reports that full training took about 2.788 million H800 GPU hours, an estimated $5.576 million at a rental price of $2 per GPU hour. That figure covers only the final training run, yet it is still a fraction of what many competing models require.
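
The headline cost is simple arithmetic, sketched below using the per-phase GPU-hour breakdown in DeepSeek’s technical report and its assumed rental price of $2 per H800 GPU hour. The dictionary and variable names are illustrative; only the final training run is counted, not prior research or ablation experiments.

```python
# GPU hours per training phase, as reported by DeepSeek (H800 GPUs).
PHASE_GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR = 2.0  # USD; the rental price assumed in DeepSeek's estimate

total_hours = sum(PHASE_GPU_HOURS.values())
cost = total_hours * PRICE_PER_GPU_HOUR
print(total_hours, cost)  # 2788000 5576000.0
```

Pre-training dominates the budget at over 95% of the GPU hours; the alignment stages are comparatively cheap.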

The benchmark results DeepSeek published are particularly telling. The model clearly outperformed open models such as Llama 3.1 405B and Qwen2.5-72B. Although it trailed Anthropic’s Claude 3.5 Sonnet on some tasks, it excelled in areas such as Chinese-language tasks and mathematical reasoning. On the MATH-500 benchmark, for example, it scored 90.2, well ahead of the other models in the comparison.

DeepSeek-V3’s performance underscores how quickly the gap between open-source and closed-source AI is closing. It points to a landscape in which no single company dominates, with a wider range of AI solutions to suit different enterprise needs.

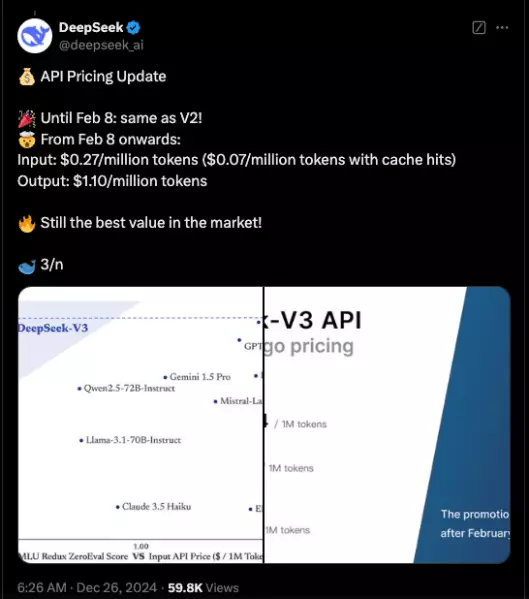

With DeepSeek-V3’s code released under the MIT license and its API offered at competitive prices, advanced AI has rarely been this accessible to businesses and developers. Open availability lowers the barrier to experimentation and encourages innovation across many sectors, broadening how and where AI can be applied.
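
For developers who want to try the API, a minimal sketch using only the Python standard library is shown below. It assumes DeepSeek’s OpenAI-compatible chat-completions endpoint at `https://api.deepseek.com/chat/completions` and the model name `deepseek-chat`; check DeepSeek’s current API documentation before relying on either. The helper names and the `DEEPSEEK_API_KEY` environment variable are hypothetical choices for this example, and a request is only sent when that variable is set.

```python
import json
import os
import urllib.request

# Assumed endpoint of DeepSeek's OpenAI-compatible API; verify against current docs.
API_URL = "https://api.deepseek.com/chat/completions"

def build_chat_request(prompt, api_key, model="deepseek-chat"):
    """Build (but do not send) a chat-completion request in the OpenAI-style format."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

def send(request):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]

if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    if key:
        print(send(build_chat_request("Explain mixture-of-experts in one sentence.", key)))
```

Because the request format mirrors OpenAI’s, existing OpenAI-compatible client code can usually be pointed at DeepSeek by changing only the base URL, key, and model name.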

As DeepSeek continues to advance, the implications are clear: AI is increasingly a field of shared knowledge and open development. DeepSeek-V3 raises the bar significantly, and organizations are now weighing the benefits of open-source alternatives against established proprietary models. Whether DeepSeek-V3 sets a new standard for AI or simply adds to a growing list of options remains to be seen, but the direction of travel is unmistakable: the future of artificial intelligence is open-source, and DeepSeek is at the forefront of this transformation.
