In recent years, advances in artificial intelligence (AI) have been remarkable. In robotics, however, the story is different. Despite significant strides, many robots, especially those in industrial settings, still operate within narrow limits that reduce their usefulness. These machines typically execute predefined sequences in factories and warehouses, with little ability to adapt to their surroundings. This rigidity points to a broader challenge: the absence of what can be termed “general physical intelligence.” The consequences are evident: while some robots can analyze visual data or manipulate objects, their skills usually cover only a narrow set of tasks, and they perform them with little finesse.

For robots to realize their potential across sectors, especially industry, they need a broader skill set. Today, robots are typically trained for one specific task at a time, in isolation, which is an inherent limitation. There is reason for optimism, though. Innovators, like those at Tesla, envision high-functioning humanoid robots such as “Optimus,” projected to be available within the next couple of decades. Elon Musk anticipates a price low enough for widespread adoption, reflecting a belief that versatile robots able to perform a wide array of functions could soon become commonplace.

A paradigm shift is occurring in robot learning: recent studies indicate that skills learned on one task may transfer to others. This aligns with projects like Google’s Open X-Embodiment, where a collaboration between multiple research labs has shown that experience gained by one robot can inform the training of another. Such shared learning could make robots far more versatile, positioning them to handle tasks that require adaptability and agility.

However, the road ahead is difficult. One of the most pressing challenges is data: language models are built on extensive text, but no comparable dataset of robot behavior exists. Companies like Physical Intelligence therefore face the daunting task of generating their own training data. The gap between the data that robots need to learn effectively and the data readily available to AI language models is substantial.

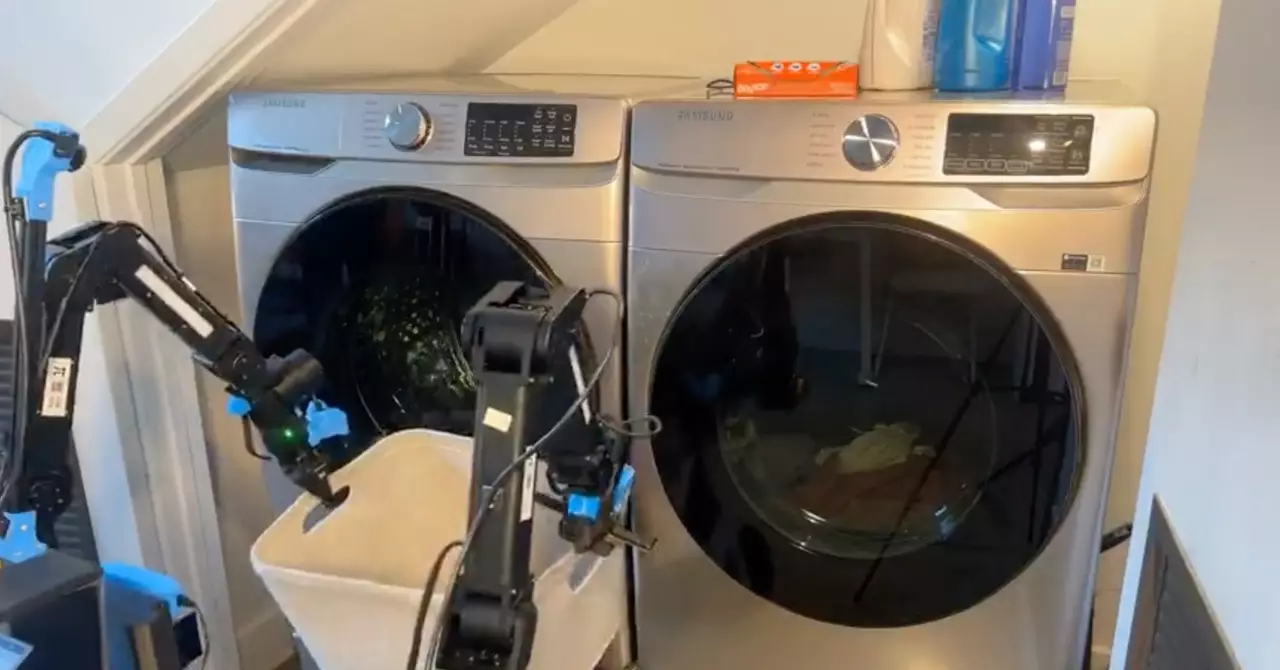

Physical Intelligence’s approach combines vision-language models, which learn from both visual and textual data, with diffusion modeling techniques originally designed for image generation. By combining these methods, the company aims to give robots a more general way of learning. The ultimate goal, however, is not a set of isolated advances but a cohesive framework that enables robots to tackle any task a human user assigns.

Looking Toward the Future

While significant hurdles remain, the journey toward more intelligent and adaptable robots is underway. The hope is that these foundational developments act as scaffolding, a preliminary structure that points to a more capable future. As researchers continue to innovate, the goal of robots that integrate seamlessly into homes and industries becomes increasingly attainable. Getting there will require sustained attention to the challenges ahead, because the future of robotics rests not only on technological advances but also on understanding the nuances of human environments and tasks.
