Deep learning, a branch of artificial intelligence, is transforming domains such as healthcare, finance, and autonomous systems. One of its major practical challenges, however, is its dependence on cloud computing resources. These resources make it possible to process vast amounts of data, but they also introduce significant security vulnerabilities, especially when the data is sensitive, such as patient records in healthcare settings. In this article, we explore a security protocol developed by researchers at MIT that uses principles of quantum mechanics to address these vulnerabilities while protecting sensitive data and maintaining deep learning accuracy.

As deep learning models become more complex, the amount of data required to train and deploy them has surged. This demand is often met through cloud computing services that offer scalable computational power. With this convenience, however, comes a pressing concern about data security. Healthcare institutions, for instance, are often reluctant to process confidential patient information through AI-driven tools because they fear data breaches and unauthorized access. This creates a critical barrier to adopting solutions that could significantly improve patient outcomes.

When two parties interact, for example a client with sensitive data and a server running a deep learning model, each has a vested interest in keeping its information confidential: the client wants to protect its data, and the server wants to protect its proprietary model. A hospital, for instance, may want to use a deep learning model to assess whether a medical image shows signs of cancer without revealing the patient’s data. Yet digital information can be copied perfectly, so a malicious actor who intercepts the data exchanged between these parties can duplicate it without leaving a trace, leading to privacy violations.

Quantum Mechanics as a Solution

To address these challenges, MIT researchers have introduced a security protocol based on quantum mechanics. By exploiting the no-cloning principle, which states that an unknown quantum state cannot be copied perfectly, they have developed a method that keeps data exchanged between the client and the server secure without compromising the accuracy of deep learning models.

In essence, the protocol encodes information into the laser light transmitted through optical fiber networks. Carrying the information in light lets it be processed while limiting what an interceptor could learn or replicate. Unlike classical information, which can be copied freely, quantum information obeys strict physical laws: the no-cloning principle rules out making a perfect copy of an unknown quantum state. This shift in how security is guaranteed opens new avenues for safely using artificial intelligence, particularly in sensitive fields.
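The no-cloning principle can be illustrated with a few lines of linear algebra. The sketch below is our own illustration, not part of the MIT protocol, and the function names are hypothetical. It applies a CNOT gate, which correctly copies the basis states |0> and |1> into a blank qubit, and shows that the same gate fails on the superposition |+> = (|0> + |1>)/√2: instead of the product state |+>|+> it produces an entangled state with only 50% fidelity to the ideal copy. The no-cloning theorem generalizes this. No single physical operation can copy every unknown state, not just this particular copier.

```python
import math

# Two-qubit states are length-4 lists of amplitudes ordered |00>, |01>, |10>, |11>;
# the first qubit is the one to copy, the second is a blank target qubit.

def kron(a, b):
    """Tensor product of two single-qubit states (length-2 lists)."""
    return [x * y for x in a for y in b]

def cnot(state):
    """CNOT with the first qubit as control: swaps the |10> and |11> amplitudes."""
    s00, s01, s10, s11 = state
    return [s00, s01, s11, s10]

def fidelity(a, b):
    """|<a|b>|^2 for normalized state vectors."""
    overlap = sum(x.conjugate() * y for x, y in zip(a, b))
    return abs(overlap) ** 2

ZERO = [1 + 0j, 0j]
ONE = [0j, 1 + 0j]
PLUS = [1 / math.sqrt(2) + 0j, 1 / math.sqrt(2) + 0j]

def try_to_clone(psi):
    """Run the CNOT 'copier' on psi (x) |0> and compare with the ideal psi (x) psi."""
    output = cnot(kron(psi, ZERO))
    ideal = kron(psi, psi)
    return fidelity(output, ideal)

for name, psi in [("|0>", ZERO), ("|1>", ONE), ("|+>", PLUS)]:
    print(f"{name}: copy fidelity = {try_to_clone(psi):.3f}")
```

The basis states are copied perfectly, while the superposition is not. This is why classical bits, which behave like basis states, can be duplicated freely, but information encoded in general quantum states of light cannot.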

How the Protocol Works

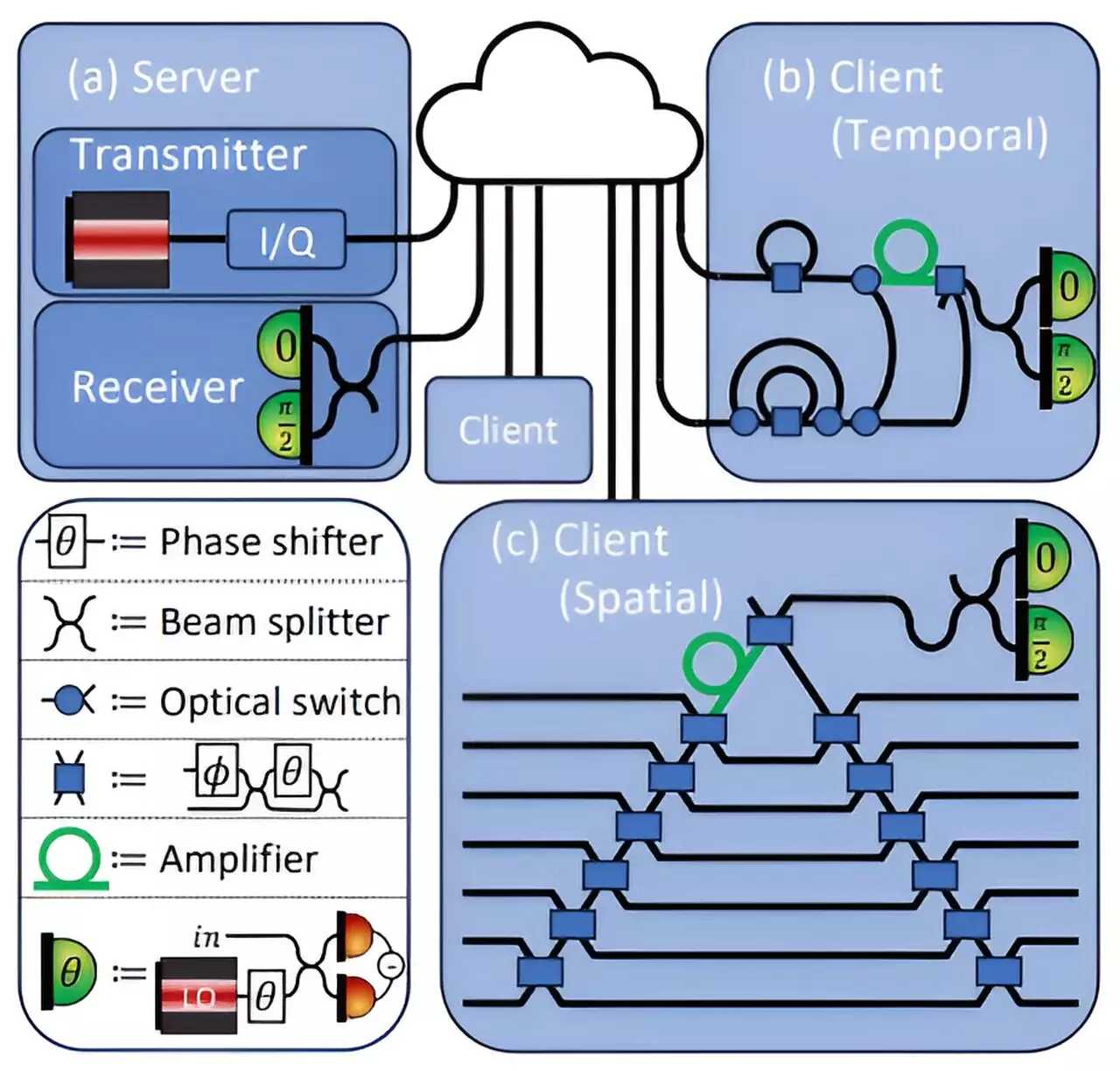

The researchers designed the protocol around a two-way interaction between a client, which holds private data, and a server, which holds a deep learning model. During this interaction, the server encodes the model’s weights, the learned parameters that determine the network’s output, into laser light. The client then uses this light to compute predictions on its own sensitive data.

The key to the design is that the client obtains only the information it needs for its analysis, while the server keeps its proprietary model protected. Quantum constraints prevent the client from copying the model’s weights. Instead of measuring all of the incoming light, the client measures only what it needs to compute its result and sends the residual light back to the server for verification. This preserves the model’s confidentiality and limits the risk of data leakage.
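The logic of the verification step can be mimicked with a toy classical simulation. This is not how the MIT protocol is implemented: in the real system the disturbance comes from quantum measurement back-action on the optical field, whereas here it is plain Gaussian noise added by hand. The names `client_round` and `server_verify`, the noise levels, and the threshold are all hypothetical. The sketch shows the shape of the check. The server compares what comes back with what it sent and rejects the round if the discrepancy exceeds what an honest, minimal measurement would cause.

```python
import random
import statistics

# Toy classical stand-in for the verification step, NOT a model of the real optics:
# "measurement disturbance" is plain Gaussian noise added to the weights that travel
# back to the server. All names and noise levels below are hypothetical.
HONEST_NOISE = 0.01  # disturbance caused by an honest client's minimal measurement
THRESHOLD = 0.02     # the server rejects the round if estimated disturbance exceeds this


def client_round(weights, x, noise_std, rng):
    """Client computes the layer output y = W x and returns it with the residual weights."""
    output = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    residual = [[w + rng.gauss(0.0, noise_std) for w in row] for row in weights]
    return output, residual


def server_verify(sent, residual, threshold=THRESHOLD):
    """Estimate disturbance as the spread of (residual - sent); pass if under threshold."""
    diffs = [r - s for srow, rrow in zip(sent, residual) for s, r in zip(srow, rrow)]
    disturbance = statistics.pstdev(diffs)
    return disturbance <= threshold, disturbance


rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(16)] for _ in range(16)]  # server's private layer
x = [rng.uniform(0, 1) for _ in range(16)]                        # client's private input

_, honest_residual = client_round(W, x, HONEST_NOISE, rng)
# Assumption: a client that tries to extract more than it needs disturbs the light more.
_, greedy_residual = client_round(W, x, 5 * HONEST_NOISE, rng)

honest_ok, honest_level = server_verify(W, honest_residual)
greedy_ok, greedy_level = server_verify(W, greedy_residual)
print(f"honest client: pass={honest_ok}, disturbance={honest_level:.4f}")
print(f"greedy client: pass={greedy_ok}, disturbance={greedy_level:.4f}")
```

The idea is loosely analogous to the error-rate checks in quantum key distribution. Security does not come from preventing measurement altogether. It comes from making any measurement beyond the minimum detectable in what is returned.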

In tests, the protocol allowed the deep learning model to reach 96% accuracy while providing strong security. Very little information leaked: only a fraction of what an adversary would need to recover either the model or the underlying data. These results mark a significant step toward privacy-preserving AI.

Looking ahead, the researchers aim to apply the protocol in other settings, particularly federated learning, in which multiple parties collaborate to train a shared model without sharing their data directly. The protocol might also be adapted to quantum operations, rather than the classical computations studied so far, which could improve both accuracy and security in future implementations.
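Federated learning is not part of the MIT protocol, but its basic aggregation step is easy to picture. The sketch below implements federated averaging (FedAvg), a standard scheme in which each party takes a training step on its own data and a coordinator averages only the resulting model parameters, weighted by dataset size. The one-parameter model, the learning rate, and the two clients' data are hypothetical choices for illustration. Nothing here involves quantum optics.

```python
def local_step(w, data, lr=0.1):
    """One gradient-descent step on mean squared error for the model y = w * x."""
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    return w - lr * grad


def fed_avg(client_weights, client_sizes):
    """Average client models, weighting each by its number of training examples."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


# Two hypothetical clients whose private data follow y = 3x; the raw pairs never leave them.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]

w = 0.0  # shared global model parameter
for _ in range(50):
    updates = [local_step(w, data) for data in clients]  # computed locally by each client
    w = fed_avg(updates, [len(d) for d in clients])       # only parameters are shared
print(f"global weight after 50 rounds: {w:.3f}")
```

Only the updated parameter travels to the coordinator, yet the global model still converges to the slope shared by both datasets. In a plain setup like this, those shared parameters can still leak information about the data, which is the kind of exposure a protocol like MIT's aims to reduce.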

A New Paradigm for Privacy in AI

This work brings together two previously separate fields, deep learning and quantum cryptography, to create a security model that protects user data while preserving the essential capabilities of deep learning. As artificial intelligence plays an increasingly central role in an interconnected world, integrating security protocols like this one will be crucial for building trust and encouraging wider adoption of AI solutions.

As researchers continue to refine and extend these ideas, the hope is that combining quantum technology with artificial intelligence will become a cornerstone of secure, high-performance computing in the near future. The MIT team’s work shows how new approaches can address pressing challenges in data security and open the way to safer AI applications across many sectors.
