Large language models (LLMs) such as GPT-4 have transformed natural language processing. They are now used in applications ranging from chatbots and translation services to creative writing and coding assistance. Despite their impressive capabilities, recent research has uncovered a striking “Arrow of Time” effect that raises questions about how we understand language and about the limits of these models. In this article, we look at what this discovery implies and why it matters well beyond machine learning, including for cognitive science.

The term “Arrow of Time” comes from physics, where it describes the one-way direction of time, from past to present to future. Researchers led by Professor Clément Hongler of the École Polytechnique Fédérale de Lausanne (EPFL) and Jérémie Wenger of Goldsmiths, University of London, have found that a similar asymmetry appears in the predictive abilities of LLMs. They compared how well models forecast the next word with how well they recover the previous one, and a clear trend emerged: the models are consistently better at predicting what comes next in a text than what came before. The researchers found the same forward-favoring bias across several architectures, including Generative Pre-trained Transformers (GPT), Gated Recurrent Units (GRU), and Long Short-Term Memory (LSTM) networks.

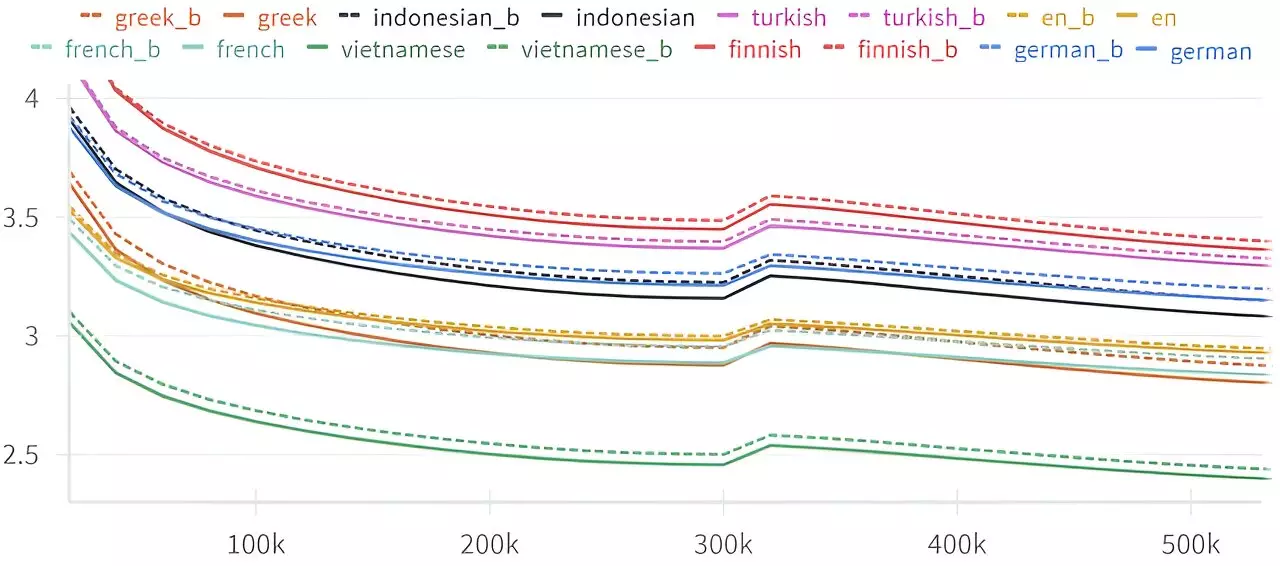

The gap is small but systematic. Across different models and languages, LLMs performed a few percent worse at backward prediction (inferring a word from the words that follow it) than at forward prediction. Because the bias is so consistent, it points to an inherent asymmetry in how these models process text. It also invites a reevaluation of the foundations of natural language understanding in AI.
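A minimal Python sketch can make the forward-versus-backward comparison concrete. It rests on loudly stated assumptions. A toy add-one-smoothed bigram model stands in for the GPT, GRU, and LSTM networks the researchers actually trained. The helper names (`train_bigram`, `avg_log_loss`, `compare_directions`) and the toy sentences are hypothetical. The idea is to train the same model class once on text in its natural order and once on the reversed token sequence, then compare the average loss in bits per token on held-out text.

```python
import math
from collections import Counter

# Hypothetical toy stand-in for the neural models in the study:
# an add-one (Laplace) smoothed bigram model over tokens.

def train_bigram(tokens):
    """Count how often each token starts a bigram, and each bigram itself."""
    unigrams = Counter(tokens[:-1])  # only tokens that have a successor
    bigrams = Counter(zip(tokens, tokens[1:]))
    return unigrams, bigrams

def avg_log_loss(model, tokens, vocab_size):
    """Average negative log2-probability of each token given the one before it (bits/token)."""
    unigrams, bigrams = model
    losses = [
        -math.log2((bigrams[(prev, nxt)] + 1) / (unigrams[prev] + vocab_size))
        for prev, nxt in zip(tokens, tokens[1:])
    ]
    return sum(losses) / len(losses)

def compare_directions(train_tokens, test_tokens):
    """Return (forward, backward) held-out loss for the same model class."""
    vocab_size = len(set(train_tokens) | set(test_tokens))
    # Forward: predict each token from the one that precedes it.
    forward = avg_log_loss(train_bigram(train_tokens), test_tokens, vocab_size)
    # Backward: reverse both training and test sequences, so the model now
    # predicts each token from the one that follows it in the original text.
    backward = avg_log_loss(
        train_bigram(train_tokens[::-1]), test_tokens[::-1], vocab_size
    )
    return forward, backward

# Usage with hypothetical toy data (far too small to say anything about the real effect).
train = "the cat sat on the mat . the dog sat on the log .".split()
test = "the dog sat on the mat .".split()
fwd, bwd = compare_directions(train, test)
print(f"forward: {fwd:.3f} bits/token, backward: {bwd:.3f} bits/token")
```

With a corpus this small the two numbers carry no evidence either way. The sketch only illustrates the shape of the protocol, in which the model class and data stay fixed and only the reading direction changes between the two runs.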

The finding echoes the work of Claude Shannon, often called the father of information theory. In his 1951 work, Shannon examined how hard it is to predict a sequence of letters forwards versus backwards. His theoretical analysis implied that the two tasks should be equally difficult, yet his experiments showed that humans found backward prediction harder. That LLMs show the same asymmetry draws an unexpected parallel between machine language processing and human cognition.
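The theoretical side of Shannon's observation follows from the chain rule of probability. A sequence's joint probability can be factored left-to-right or right-to-left, so the total uncertainty is the same in either direction, even though the individual conditional entropies can differ. The Python sketch below checks this identity for adjacent character pairs in a hypothetical toy string. It verifies that H(X_t) + H(X_t+1 | X_t) and H(X_t+1) + H(X_t | X_t+1) both equal the joint entropy H(X_t, X_t+1). It is an illustration of the identity only, not Shannon's experimental procedure or the EPFL team's method, and the function names are our own.

```python
import math
from collections import Counter

def entropy(counts):
    """Shannon entropy in bits of an empirical distribution given as counts."""
    total = sum(counts.values())
    return -sum(c / total * math.log2(c / total) for c in counts.values())

def conditional_entropy(pairs):
    """H(second | first) in bits, estimated from a list of (first, second) pairs."""
    joint = Counter(pairs)
    first = Counter(a for a, _ in pairs)
    total = len(pairs)
    return -sum(c / total * math.log2(c / first[a]) for (a, _), c in joint.items())

# Hypothetical toy text; any string works, since the identity is exact.
text = "the cat sat on the mat and the dog sat on the log"
pairs = list(zip(text, text[1:]))            # (x_t, x_{t+1})
reversed_pairs = [(b, a) for a, b in pairs]  # (x_{t+1}, x_t)

# Forward factorization: H(X_t) + H(X_{t+1} | X_t)
forward = entropy(Counter(a for a, _ in pairs)) + conditional_entropy(pairs)
# Backward factorization: H(X_{t+1}) + H(X_t | X_{t+1})
backward = entropy(Counter(b for _, b in pairs)) + conditional_entropy(reversed_pairs)
# Joint entropy H(X_t, X_{t+1})
joint = entropy(Counter(pairs))

print(f"forward {forward:.4f}  backward {backward:.4f}  joint {joint:.4f} (bits)")
```

The two totals agree for the true (here, empirical) distribution. This suggests that a measured gap between forward and backward prediction reflects how hard each direction is for a learner, human or model, to approximate, rather than a difference in the information contained in the text.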

Professor Hongler’s observation that LLMs are sensitive to the direction of time when processing language raises deeper questions about the structure of language itself. If language understanding depends, at least in part, on temporal direction, what does that mean for how future LLMs are developed and fine-tuned? Could next-generation models be designed to compensate for their weaker backward prediction?

Practical Implications and Future Research Directions

The discovery may also matter beyond theoretical linguistics. The researchers suggest that sensitivity to the Arrow of Time could serve as an indicator of intelligence or life in information-processing agents. The line between AI and human-like capabilities is increasingly blurred, and further work on this question could inform the design of more sophisticated LLMs.

The phenomenon also connects to long-standing questions in physics about the nature of time. If the study of language models can shed light on how we perceive the passage of time, it may open new avenues in cognitive science and neuroscience. As researchers work out what temporal directionality means for language processing, the insights may carry over to questions about memory, causality, and how we understand reality itself.

The study's origins are unusual. It began as a collaboration to build a chatbot for improvisational theater. The chatbot had to construct narratives leading to predetermined endings, and in building it the research team unexpectedly came across this property of language models. Theater practitioners bring a nuanced understanding of storytelling, and their involvement pushed the project toward a new angle on machine learning. It is a reminder that work at the intersection of different fields can yield unexpected insights.

The discovery that LLMs are better at predicting what comes next than what came before poses a range of intellectual challenges. It pushes both the AI and linguistics communities to re-examine the structure of language, cognition, and even time itself. The Arrow of Time, though rooted in physics, appears to have an echo in today's leading AI models, and that echo may reshape how we think about language understanding.
